Run Open-Source LLMs Offline in 2025 — Private, Fast & Free

📅 August 2, 2025

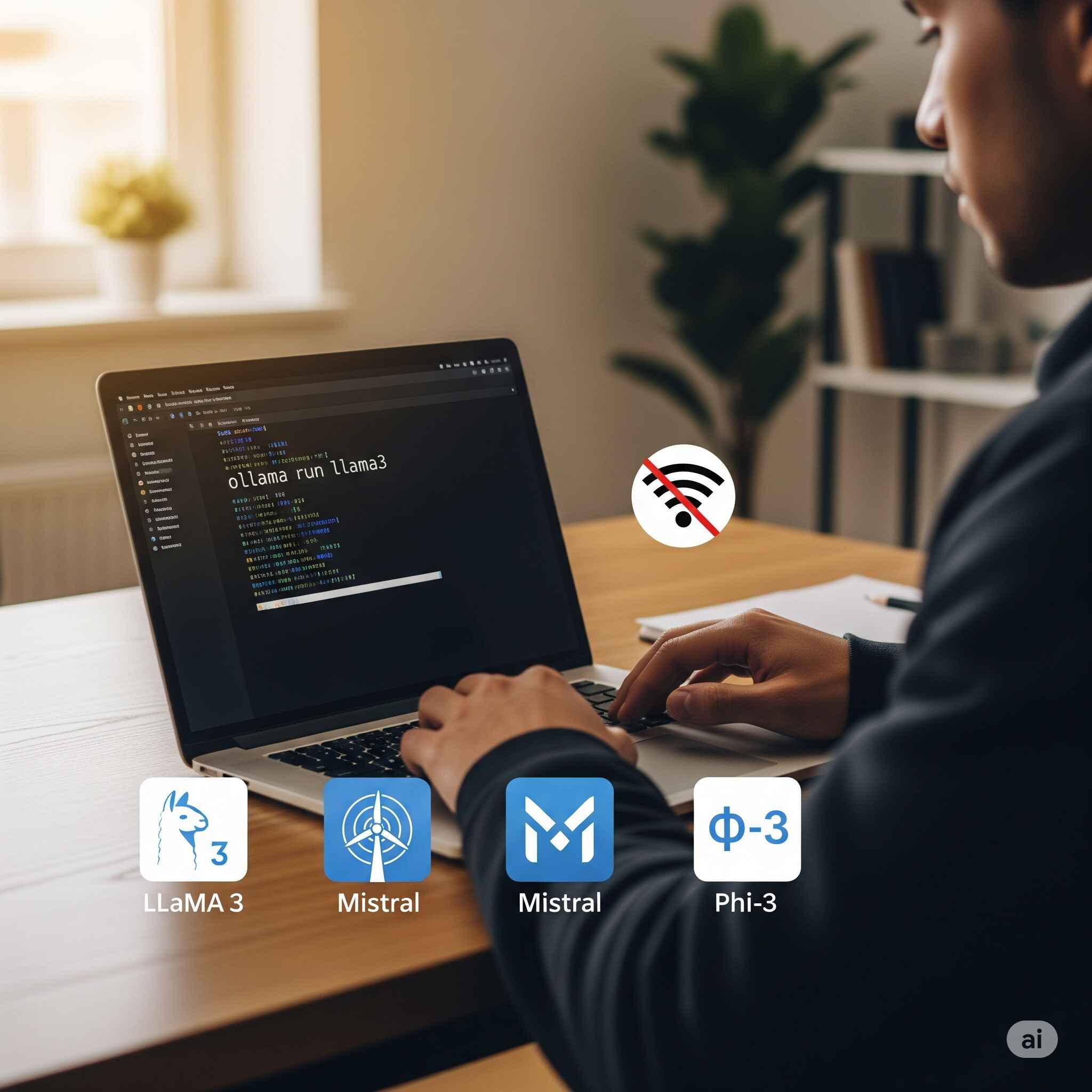

Running large language models (LLMs) offline is no longer just for researchers: it's now easy, free, and private. Whether you're a developer, student, or privacy enthusiast, offline LLMs give you ChatGPT-style AI without needing an internet connection or API key.

In this guide, you'll learn how to run open-source LLMs locally, which tools to use, hardware requirements, and exactly why this is worth doing in 2025.

🚀 Why Run LLMs Offline?

Running LLMs locally gives you:

✅ Full privacy — your data never leaves your device

✅ Zero cost per request — no API charges or rate limits

✅ Customization freedom — choose models, tweak behaviors

✅ Offline access — perfect for secure or air-gapped systems

If you're building apps, assistants, or just want AI on your terms, offline LLMs are the future.

Best Open-Source LLMs for Offline Use (2025)

Here are some of the top-performing, fully free LLMs you can run locally:

| Model | Size | Features |
| --- | --- | --- |
| LLaMA 3 (Meta) | 8B / 70B | High-quality, open weights, widely supported |
| Mistral 7B / Mixtral | 7B / Mixture of Experts | Fast, multilingual, open license |
| Phi-3 (Microsoft) | 3.8B / 14B | Tiny but surprisingly capable |
| Gemma (Google) | 2B / 7B | Lightweight, clean instruction-tuning |
| TinyLlama | 1.1B | Designed for ultra-low-resource systems |

📝 Most of these are available in GGUF format, which stores quantized (compressed) weights that need less RAM and disk space.

What You’ll Need (Hardware Requirements)

Minimum setup to run 3–7B models:

CPU: Modern 4-core (Intel i5+ / Ryzen 5+)

RAM: 8 GB minimum, 16 GB for smooth usage

Disk: 5–20 GB per model file (depending on quantization)

GPU (optional): NVIDIA (6GB+ VRAM) or Apple M1/M2/M3 for better performance

You can still run small models on entry-level laptops, especially with tools like Ollama or LM Studio.
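
To sanity-check the numbers above before downloading anything, here is a rough sketch of the arithmetic: weight size is roughly parameters × bits per weight ÷ 8. The bits-per-weight values, the `headroom_gb` default, and the function names are assumptions picked for illustration, so treat the output as a ballpark and not as exact file sizes.

```python
# Rough size estimate for a quantized model: parameters x bits per weight / 8.
# The bits-per-weight figures are approximations chosen for illustration;
# real GGUF files add metadata and may keep some tensors at higher precision.

APPROX_BITS_PER_WEIGHT = {
    "Q4_0": 4.5,   # 4-bit weights plus a per-block scale (approximate)
    "Q6_K": 6.6,   # approximate
    "Q8_0": 8.5,   # approximate
    "F16": 16.0,   # unquantized half precision
}


def estimate_model_gb(params_billions: float, quant: str) -> float:
    """Return an approximate weight size in gigabytes (10^9 bytes)."""
    bits = APPROX_BITS_PER_WEIGHT[quant]
    total_bytes = params_billions * 1e9 * bits / 8
    return total_bytes / 1e9


def fits_in_ram(params_billions: float, quant: str, ram_gb: float,
                headroom_gb: float = 2.0) -> bool:
    """True if the weights plus a hypothetical headroom (OS, context cache)
    fit in the given amount of RAM."""
    return estimate_model_gb(params_billions, quant) + headroom_gb <= ram_gb


if __name__ == "__main__":
    for name, size in [("TinyLlama", 1.1), ("Phi-3", 3.8), ("Mistral 7B", 7.0)]:
        for quant in ("Q4_0", "Q8_0", "F16"):
            print(f"{name:12s} {quant:5s} ~{estimate_model_gb(size, quant):5.1f} GB")
```

Under these assumptions a 7B model at Q4_0 comes out near 4 GB, which is why it is comfortable on a 16 GB machine while the unquantized F16 version is not.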

Ollama is the simplest way to run LLMs offline. It auto-installs and configures models behind the scenes.


🛠️ Steps:

Install Ollama:

curl -fsSL https://ollama.com/install.sh | sh

or use the .exe installer for Windows.

Run a model (e.g., Mistral):

ollama run mistral

Start chatting directly in your terminal.

✅ Supports models like llama3, phi3, gemma, and custom ones too.

You can also connect it with tools like LangChain or Flowise for full AI agents.
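
If you would rather call the model from your own script than from the terminal, Ollama also serves a local HTTP API. The sketch below assumes the Ollama server is running on its default address (`http://localhost:11434`) and that the `mistral` model has already been pulled. The helper names `build_generate_request` and `ask` are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama's local server is listening on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "mistral") -> urllib.request.Request:
    """Build (but do not send) a non-streaming generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "mistral", timeout: float = 120.0) -> str:
    """Send the prompt to the local model and return the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]
```

With the server running, `print(ask("Explain GGUF in one sentence."))` should print the model's reply. Nothing leaves your machine because the request only goes to localhost.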


🧑💻 GUI Option: LM Studio (No-Code AI Chat)

If you prefer a graphical interface, LM Studio lets you download and run models with no terminal required.

✅ Drag & drop .gguf models

✅ Chat directly in a local app

✅ Full offline usage

Great for writers, researchers, or casual users who want ChatGPT-like interaction locally.

🔄 Advanced Workflow: Ollama + LangChain + Vector Search

Build a full local AI assistant:

Ollama → Runs your LLM offline

LangChain → Orchestrates tools (memory, RAG, APIs)

Chroma / Weaviate → Local vector DBs for search

Tauri / Electron → Package your own AI desktop app

🧠 Ideal for developers building custom copilots or document assistants.

Check LangChain’s Ollama guide to get started.
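
To make the retrieval step of this workflow concrete, here is a toy sketch in plain Python. It is not LangChain or Chroma code: word-count cosine similarity stands in for real embeddings and a vector DB, and every name in it (`tokenize`, `retrieve`, `build_prompt`, `NOTES`) is hypothetical. The idea is the same, though: find the most relevant snippet, then hand it to the model as context.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for a real embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: list[str], k: int = 1) -> str:
    """Assemble a prompt that grounds the model in the retrieved context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical knowledge base for the example.
NOTES = [
    "Ollama runs the language model on the local machine.",
    "Chroma stores document embeddings for similarity search.",
    "Tauri packages a web front end as a desktop application.",
]
```

The string returned by `build_prompt("Which tool stores embeddings?", NOTES)` is what you would send to the local model. In a real setup, an embedding model and a vector DB would replace `tokenize` and `retrieve`.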

Performance Tips

Use quantized models (like Q4_0, Q6_K) for lower RAM use

Prefer Mistral or Phi-3 for speed on CPUs

On Macs, Apple M-series chips (M2/M3) give strong local performance because the GPU shares unified memory with the CPU

Don’t run 13B+ models unless you have 32GB RAM or GPU support.
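
To see whether a tip actually helps on your hardware, measure it. A non-streaming response from Ollama's generate API includes `eval_count` (tokens generated) and `eval_duration` (in nanoseconds), so tokens per second is a one-line calculation. The helper name and the sample numbers below are made up for illustration and are not a benchmark.

```python
def tokens_per_second(stats: dict) -> float:
    """Generation speed from Ollama response stats (durations in nanoseconds)."""
    duration_ns = stats.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0
    return stats.get("eval_count", 0) / (duration_ns / 1e9)


# Hypothetical numbers for illustration, not a measurement.
sample = {"eval_count": 120, "eval_duration": 6_000_000_000}
print(f"{tokens_per_second(sample):.1f} tokens/s")  # prints "20.0 tokens/s"
```

Run the same prompt against two quantizations or two models and compare the results to decide which one suits your machine.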

🔐 Privacy & Security Perks

Unlike cloud AI, offline LLMs:

❌ Don’t log your data

❌ Don’t require sign-ins

✅ Let you build air-gapped systems

✅ Are ideal for sensitive projects (legal, research, etc.)

Even governments and enterprises are moving toward self-hosted LLMs for these reasons.

🧠 Final Thoughts: Who Should Use Offline LLMs?

You should consider running open-source LLMs offline if you are:

A developer building secure AI tools

A writer/researcher wanting private assistance

A student exploring AI without needing an API

An indie hacker or startup avoiding OpenAI/Gemini lock-in

In 2025, with tools like Ollama, LM Studio, and LangChain, offline AI is no longer just possible — it's powerful.